UAE: Project to develop 3D avatars of teachers, students for virtual classes

MBZUAI professor says the tech could even create AI-powered avatars of historical figures

Dubai: A university professor in the UAE has shared details of a project to create 3D avatars of teachers and students - or even historical figures - for enhancing virtual classrooms.

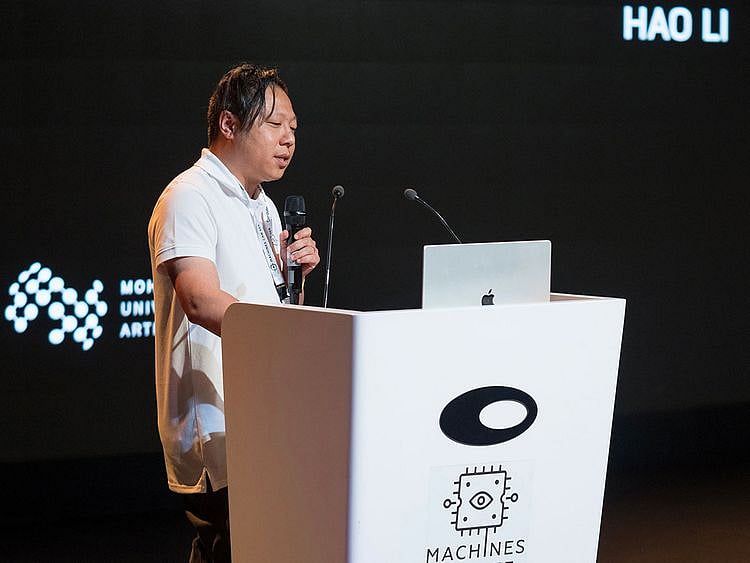

Hao Li, associate professor of Computer Vision at Mohammed bin Zayed University of Artificial Intelligence (MBZUAI), was speaking to Gulf News on the sidelines of the ‘Machines Can See’ conference at the Museum of the Future in Dubai.

A keynote speaker on computer vision at the summit, Li is also the CEO and founder of Pinscreen Technology, a US startup that builds cutting-edge AI-driven virtual avatar technologies.

“One of the challenges we are keen to tackle with AI is access to education in remote areas,” said Li, who is also the director of a new research centre called the Metaverse Centre.

“Technologies including AI, virtual reality, and augmented reality are developing rapidly, while internet access is becoming more widespread. This opens up the opportunity to bring transformative education solutions to children who may not have a place at a traditional school,” he pointed out.

“At MBZUAI, we are using AI to develop ways to create realistic 3D video and avatars from 2D images. This is important because creating 3D content for VR and AR is traditionally very expensive and time consuming. Using AI, we are working to reduce this cost and even create 3D content in real time.

“This will allow us to create realistic 3D avatars of teachers and students, paving the way for more engaging virtual classrooms. It will also allow for the creation of personalised, engaging, interactive 3D content.”

Historical figures

He added: “We can now create realistic 3D versions of historical figures such as Winston Churchill, allowing children to see and hear him deliver famous speeches, recreate key moments from history, or show the functioning of human organs in 3D.”

In many parts of the world, Li said, this type of technology could help bring high quality personalised education to children who would otherwise not have access to education at all. Where education systems are more developed, this technology can still be used to enhance education and support teachers, with immersive 3D content and tools that help bring education to life.

The ultimate goal of using generative AI in education is to create a customised, personalised learning experience that meets the unique needs of each individual, he pointed out.

“Imagine taking an online course where you don’t just watch videos, but you can interact with the person teaching in real time. It would feel like talking to another person, and this kind of experience would be beneficial. Some people worry that AI might violate their privacy, but in this case, it’s actually better. For example, if you have health issues and want to talk to someone, talking to an AI could be a great option because the AI wouldn’t be judgemental and would give you the best response, enabling you to talk as freely as you want.”

‘Human digitisation’

‘Human digitisation’ is about creating your own avatar from descriptions, pictures and other inputs. Li said the most obvious application is in video games, where you want to be yourself inside the game.

“That’s for entertainment purposes but one of the core capabilities is for telecommunication. So instead of having a Zoom meeting where everything is 2D, we can have a three-dimensional experience using augmented and virtual reality headsets.”

“Another direction is, what if you can actually digitise yourself on your own? So those are technologies that allow you to create a digital version of yourself that you can use to communicate and interact with other people.”

He said the innovation on the website of Avatarneo allows for the creation of a more realistic avatar from a single photo without the need for neutral lighting conditions or the removal of any accessories.

Digital twin of Expo 2020 Dubai

Recalling the digital twin of the Expo 2020 Dubai, Li said he was also part of that human digitisation project in the UAE.

“We were part of a human digitisation project for Expo 2020 Dubai, which was undertaken by a company called Magnus. This project involved creating a complete digital twin of the Expo 2020 sites, covering an area of 4 km by 4 km. The digital twin provided visitors with an augmented reality (AR) experience, allowing them to visit the pavilions on the website. We were responsible for building avatars, which visitors could use to take photos and create cartoon-like images. Overall, the project appears to have been a significant undertaking in the field of digitalisation and AR.”

Word of caution

In 2020, Li and his company, which also provides services to platforms like Netflix, went to the World Economic Forum in Davos and demonstrated the first real-time face replacement technology.

However, he cautions that this can have dangerous applications, such as identity theft and spreading disinformation.

“But positive applications include film production. For example, movies can be dubbed in different languages with more human-like voices.”

Li’s company has dubbed an entire movie with this technology, from German/Polish to English. They also did similar work for the French film AKA.

He said an example of mapping an actor’s performance onto another face is the movie Slumberland, where his company mapped an adult actor’s performance onto the face of a toddler.

“AI has limitations, and we need to understand its capabilities. We should be careful about believing everything we see online as it’s easy to generate fake content,” Li added.

iPhone Animoji

Talking about the technology that he developed for the Animoji feature on the iPhone, Li said: “That is a technology that I developed back in 2009-2010. I was doing my PhD at ETH Zurich and one of the things that was developing there was real-time face tracking, and one specific thing is how we use 3D depth sensors to capture facial performances and map those onto either the face of the same 3D person or another 3D character. That basically became the startup company, which was later acquired by Apple and then incorporated as part of the Animoji technology.”

Since then, 3D depth-sensor hardware has progressed in capturing depth information in images, and efforts are under way to overcome the limitations in capturing large working volumes, Li said.

© Al Nisr Publishing LLC 2026. All rights reserved.