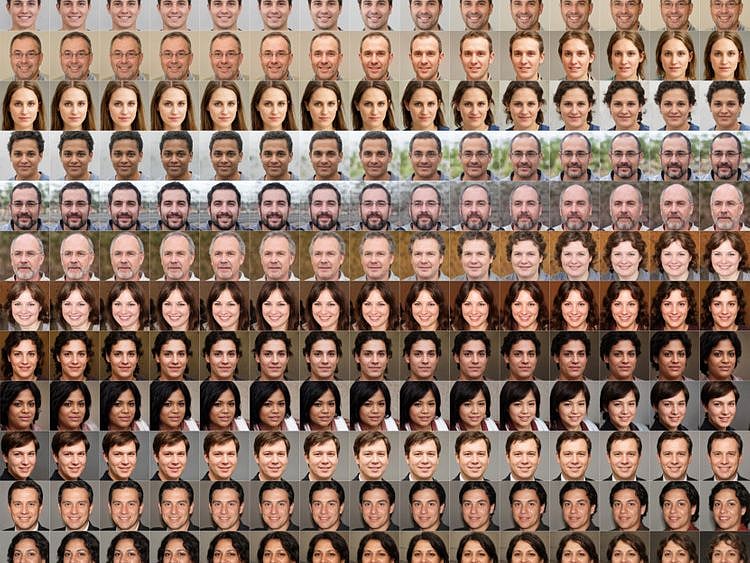

Washington: These people may look familiar. They may look like users you’ve seen on Facebook, Twitter or Tinder, or maybe people whose product reviews you’ve read on Amazon. They look stunningly real at first glance, but they do not exist. They were born from the mind of a computer.

There are now businesses that sell fake people. On the website Generated.Photos, you can buy a “unique, worry-free” fake person for $2.99, or 1,000 people for $1,000. If you just need a couple of fake people, for characters in a video game or to make your company website appear more diverse, you can get their photos free on ThisPersonDoesNotExist.com. Adjust their likeness as needed. Make them old, young or the ethnicity of your choosing. If you want your fake person animated, a company called Rosebud.AI can do that and can even make them talk.

These simulated people are starting to show up around the internet, used as masks by real people with nefarious intent: spies who don an attractive face in an effort to infiltrate the intelligence community; right-wing propagandists who hide behind fake profiles, photo and all; online harassers who troll their targets with a friendly visage.

These creations became possible only in recent years thanks to a new type of artificial intelligence called a generative adversarial network, or GAN. In essence, you feed a computer programme a heap of photos of real people. It studies them and tries to come up with its own photos of people, while another part of the system tries to detect which of those photos are of fake people. The back-and-forth makes the end product ever more indistinguishable from the real thing.
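To make that back-and-forth concrete, here is a minimal sketch of the same idea on a toy problem. It is not how any of the face generators mentioned above are built: instead of photos, the "real" data are single numbers drawn around 4.0, the generator is a straight line applied to random noise, and the detector is a one-input logistic classifier. Every name and setting in it (`train_toy_gan`, the learning rate, the step count) is an assumption chosen for illustration.

```python
import math
import random


def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Toy one-dimensional GAN (illustrative assumption, not a real face model)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.1, 0.0  # detector: P(sample is real) = sigmoid(w * x + c)

    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # stand-in for a photo of a real person
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        fake = a * z + b            # the generator's attempt

        # Detector step: raise log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: raise log D(fake), i.e. try to fool the detector.
        d_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - d_fake) * w
        a += lr * grad_fake * z
        b += lr * grad_fake

    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print("generator: fake = %.3f * noise + %.3f" % (a, b))
    print("detector:  P(real) = sigmoid(%.3f * x + %.3f)" % (w, c))
```

Each pass through the loop is one round of the contest: the detector adjusts itself to tell real from fake, then the generator adjusts itself to be harder to catch. Real systems swap the straight line and the one-input classifier for deep neural networks trained on very large sets of photos.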

Given the pace of improvement, it’s easy to imagine a not-so-distant future in which we are confronted with not just single portraits of fake people but whole collections of them - at a party with fake friends, hanging out with their fake dogs, holding their fake babies. It will become increasingly difficult to tell who is real online and who is a figment of a computer’s imagination.

“When the tech first appeared in 2014, it was bad; it looked like the Sims,” said Camille Francois, a disinformation researcher whose job is to analyse the manipulation of social networks. “It’s a reminder of how quickly the technology can evolve. Detection will only get harder over time.”

Advances in facial fakery have been made possible in part because technology is now so much better at identifying key facial features. You can use your face to unlock your smartphone, or tell your photo software to sort through your thousands of pictures and show you only those of your child.

Facial recognition programmes

Facial recognition programmes are used by law enforcement to identify and arrest criminal suspects (and also by some activists to reveal the identities of police officers who cover their name tags in an attempt to remain anonymous). A company called Clearview AI scraped billions of public photos from the web, casually shared online by everyday users, to create an app capable of recognising a stranger from one photo. The technology promises superpowers: the ability to organise and process the world in a way that wasn’t possible before.

But facial-recognition algorithms, like other AI systems, are not perfect. Thanks to underlying bias in the data used to train them, some of these systems are not as good, for instance, at recognising people of colour. In 2015, an early image-detection system developed by Google labelled two Black people as “gorillas,” most likely because the system had been fed many more photos of gorillas than of people with dark skin.

Moreover, cameras - the eyes of facial-recognition systems - are not as good at capturing people with dark skin. That unfortunate standard dates to the early days of film development, when photos were calibrated to best show the faces of light-skinned people. The consequences can be severe. In January 2020, a Black man in Detroit named Robert Williams was arrested, accused of a crime he did not commit because of an incorrect facial-recognition match.

Artificial intelligence

Artificial intelligence can make our lives easier, but ultimately it is as flawed as we are, because we are behind all of it. Humans choose how AI systems are made and what data they are exposed to. We choose the voices that teach virtual assistants to hear, leading these systems not to understand people with accents. We label the images that train computers to see; they then associate glasses with “dweebs” or “nerds.” We design a computer program to predict a person’s criminal behaviour by feeding it data about past rulings made by human judges - and in the process baking in those judges’ biases.

Artificial intelligence makes mistakes, too, in those fake people it conjures up. There are common detectable flaws: earrings that do not match, a lack of symmetry, or a background that is distorted or eerily misshapen.

And humans err. We overlook or glaze past the flaws in these systems, all too quick to trust that computers are hyper-rational, objective, always right. Studies have shown that, in situations where humans and computers must cooperate to make a decision - to identify fingerprints or human faces - people consistently made the wrong identification when a computer nudged them to do so. In the early days of dashboard GPS systems, drivers famously followed the devices’ directions to a fault, sending cars into lakes, off cliffs and into trees.

Is this humility or hubris? Do we place too little value in human intelligence, or do we overrate it, assuming we are so smart that we can create things smarter still?

The algorithms of Google and Bing sort the world’s knowledge for us. Facebook’s newsfeed filters the updates from our social circles and decides which are important enough to show us. With self-driving features in cars, we are putting our safety in the hands (and eyes) of software.

We place a lot of trust in these systems, but they can be as fallible as us.

© Al Nisr Publishing LLC 2026. All rights reserved.