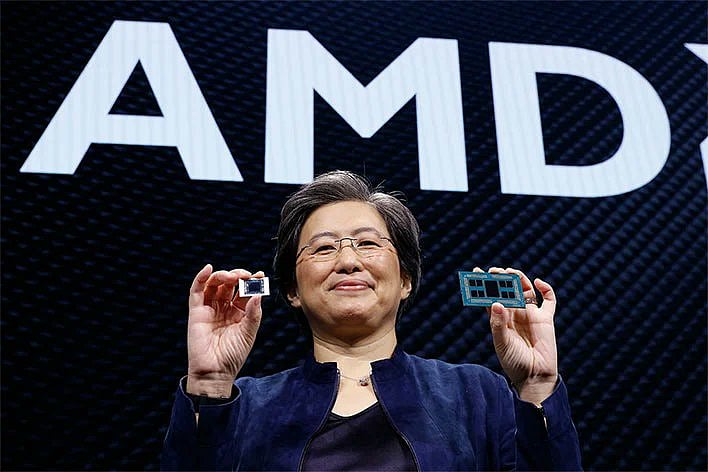

AMD secures $100 billion chip deal with OpenAI, stock soars 34%

Deal includes an option for OpenAI to acquire 10% of AMD’s shares via warrants

Advanced Micro Devices (AMD) has announced a groundbreaking multi-year, multi-generation strategic partnership with OpenAI, the AI pioneer behind ChatGPT, in a deal valued at $100 billion.

The agreement, unveiled on Monday, positions AMD as a key supplier of AI infrastructure, with OpenAI committing to deploy up to 6 gigawatts of AMD’s Instinct GPUs, starting with a 1-gigawatt rollout of the MI450 series in the second half of 2026.

The deal includes an option for OpenAI to acquire approximately 10% of AMD’s shares via warrants, contingent on deployment milestones and stock performance.

AMD’s stock surged 34% in pre-market trading on Monday, adding over $100 billion to its market capitalization and putting the shares on track for their largest single-day gain in nearly a decade.
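As a rough sanity check on those two figures, the arithmetic can be sketched as follows. It takes the 34% gain and the $100 billion added value at face value and derives the market capitalization they imply; because the reported figure is "over $100 billion," the results are lower bounds. The function name is purely illustrative.

```python
def implied_prior_market_cap(gain_fraction: float, added_value_usd: float) -> float:
    """Return the market cap before a move, given the fractional gain
    and the dollar value that gain added."""
    if gain_fraction <= 0:
        raise ValueError("gain_fraction must be positive")
    return added_value_usd / gain_fraction


# Figures as reported in the article; "over $100 billion" makes these lower bounds.
gain = 0.34
added = 100e9

prior = implied_prior_market_cap(gain, added)
after = prior + added

print(f"Implied prior market cap: at least ${prior / 1e9:.0f} billion")
print(f"Implied market cap after the move: at least ${after / 1e9:.0f} billion")
```

On these numbers, the move implies a market capitalization of roughly $294 billion before the announcement and about $394 billion after it.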

The announcement comes amid a global AI infrastructure boom, with Citigroup projecting Big Tech’s AI spending to reach $2.8 trillion by 2029, fueled by demand for 55 gigawatts of new power capacity.

Collaboration

The partnership builds on years of collaboration, with OpenAI contributing to the development of AMD’s MI450 series. The series is designed to excel in inference workloads (serving AI requests in real time), where AMD’s chiplet technology offers a competitive edge.

Using the Infinity Fabric interconnect, AMD’s chiplet architecture scales memory cost-effectively, an area where industry analysts say it outperforms Nvidia’s monolithic designs.

The emphasis on inference aligns with a broader trend: as pre-training gains plateau due to limited data growth, the industry’s focus is moving toward inference.

Nvidia, AMD’s rival and OpenAI’s existing partner, recently committed $100 billion to the AI firm, securing 10 gigawatts of compute capacity.

Diversification

OpenAI’s diversification to AMD reflects the industry’s voracious appetite for computing power, with Nvidia CEO Jensen Huang predicting $1 trillion in annual AI-chip spending by the decade’s end.

The deal also draws attention amid US-China tech tensions, as China’s Zhaoxin unveiled its KH-50000 chiplet-based processor, mirroring AMD’s EPYC design.

However, questions linger over OpenAI’s financials: the company reported a $2.5 billion cash burn in the first half of 2025 despite $4.3 billion in revenue.

AMD executives hailed the deal as “transformative,” signaling a potential challenge to Nvidia’s 90%+ share of the data center GPU market. Industry voices such as Higgsfield AI have praised AMD’s inference performance.

Chiplet design

AMD's "chiplet" design is based on manufacturing different parts of a chip on different process nodes and connecting them with a high-speed interconnect. This allows designers to use lower-cost processes like 5nm for less complex memory parts while using more expensive 3nm processes for cores.

AMD has reportedly developed a best-in-class interconnect, Infinity Fabric, to link these parts at high speed. This allows AMD to pack more memory into a single chip while keeping costs down, making the design well suited to inference workloads.

This is how the MI355X offers considerably more memory than Nvidia's Blackwell at a much lower cost. AMD's MI450 series, co-developed with OpenAI, targets inference rather than training, as pre-training gains flatten due to limited data growth.

The OpenAI-AMD deal marks a significant shift in the AI hardware landscape, building on years of collaboration where OpenAI provided input for AMD's MI300X chip design.

This partnership comes amid a global AI infrastructure race, with Citigroup forecasting Big Tech's AI spending to hit $2.8 trillion by 2029, driven by demand for 55 gigawatts of new power capacity.


© Al Nisr Publishing LLC 2026. All rights reserved.