Is this the 'Nvidia killer'? Cerebras’ wafer-scale AI engine sparks supremacy showdown

World's largest single chip: an entire silicon wafer with 2.6T transistors, 900K cores

Cerebras Systems is igniting an AI revolution in a the way no other hardware company has done till now.

Here's the AI story so far: It is mostly built on "training", based on "large-language models" (LLMs) using hundreds of thousands of computer graphics processing units (GPUs) arrayed in a "cluster" or "stack" (racks).

These computers working in parallel are usually housed in power-hungry "data centres" and are kept under strict temperature controls.

AI training vs AI inference

But AI "training" is about to be eclipsed by AI "inference".

This trend is expected to reshape the $100-billion AI silicon market.

What's the difference? AI training builds a model by learning patterns from vast datasets (LLMs), requiring intense computation (GPUs/TPUs) over long periods. AI inference uses that finished model to make quick predictions or decisions on new data; it demands efficiency and low latency, often continuously in production.

While training develops the AI's "brain," an infrequent, resource-heavy process, inference is its "application" phase, running constantly in real-world scenarios.

Cerebras is betting on AI inference. Now, OpenAI's $10-billion investment in Cerebras confirms they're not alone in the AI inference game.

Post-Nvidia era?

Nvidia, of course, is currently riding the wave of AI. Its GPUs rule the industry, an AI training ecosystem that makes Nvidia the world's most valuable company, with a market capitalisation of $4.53 trillion (as of 12:17 am EST, Jan. 20, 2026).

The chip "architecture" followed by Nvidia and its peers — like Broadcom, ASML, Samsung and AMD — is fuelled by a well-estabhished technique, known as "clustering", or stacking.

If one or several of these chips fail within the cluster, it continues to do it work in a sort of "swarm".

So Tesla's "Colossus", the most powerful data centre in the world, a supercomputer in Memphis, Tennessee, behind xAI’s Grok (separate from, but linked to Tesla/X), operates 200,000 Nvidia H100 GPUs.

Reports indicate a total capacity of 555,000 NVIDIA GPUs by January 2026.

What is GPU clustering?

GPU clustering links multiple GPUs into a massively parallel processing system crucial for AI training.

This allows the platform to accelerate complex computations on extensive datasets, enabling faster model development, and handling tasks like neuro-linguistic programming (NLP) and computer vision.

A GPU cluster connects many servers (nodes), using high-speed networks (like InfiniBand or NVLink) to function as a single, massive supercomputer.

This architecture distributes large AI workloads across thousands of GPU "cores", allowing them to work in parallel, drastically reducing training times for complex models.

Nvidia is tops in the GPU clustering cgame, particularly in high-bandwidth memory (HBM) required for the current generation of AI platforms.

Key differentiator: Chip architecture

While Nvidia scales via thousands of H100/H200 GPU clusters fighting memory bottlenecks, Cerebras delivers one dinner-plate-sized processor with:

900,000 AI cores

4 trillion transistors

44GB on-chip SRAM (no HBM lag)

1,000x memory bandwidth

OpenAI tests confirm order-of-magnitude inference speed gains over GPU clusters — critical for real-time ChatGPT responses where "latency" kills user experience.

With OpenAI's $10-billion bet on Cerebras, it signals a post-Nvidia era that revolves around "clustering" of GPUs.

Breaking away

Cerebras breaks away from the traditional route altogether with a visionary chip design that leapfrogs GPU clusters.

Instead, Cerebras belongs to a league of its own, redefining AI hardware, challenging Nvidia's dominance — with its radical wafer-scale innovation.

Silicon wafer

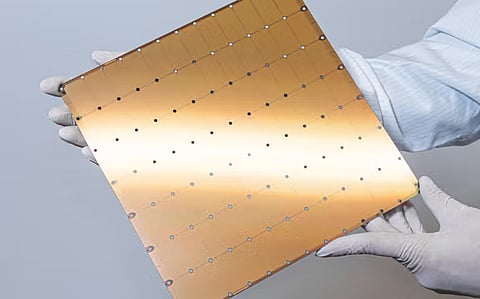

Founded in 2015 by Andrew Feldman and a team of chip wizards, Cerebras burst onto the scene with its revolutionary WSE — the world's largest single chip, spanning an entire silicon wafer (46,225 mm²) packed with 2.6 trillion transistors and 900K cores.

Why is it dubbed the 'Nvidia killer'?

Dubbed the "Nvidia killer," Cerebras' wafer-scale engine has reportedly crushed Nvidia's H200 in raw AI training power: 125 petaflops FP16 vs. H200's 4 petaflops, 44GB on-chip SRAM (no HBM bottlenecks).

Because of its inherent design, the WSE system allows for 20x faster scaling for massive LLMs without multi-GPU headaches.

Cerebras' CS-3 (2024) slashes training times from months to days, targeting trillion-parameter models for "hyperscalers" like Apple, IBM and Meta or Google, Oracle and Alibaba.

Given its potential, angel investors are lining up.

Cerebras Systems has seen its valuation skyfocket: From Series A (2016) to G (2025), its valuation jumped from $4B to $8B.

Hype vs reality

The "killer" hype stems from solving Nvidia's memory-bandwidth walls: WSE's single-chip design eliminates interconnect losses (NVLink overhead), enabling seamless "exascale" AI on one box.

So while Nvidia's H200 shines in GPUs' ecosystem/software, Cerebras claims 1,000x memory bandwidth edge for frontier research.

Timeline

2016: Series A funding ($21M)

2021: $250M at $4-billion valuation

2023: $720M to $4.1-billion valuation

Sept 2024: $750M-$1 billion raised, at $7-$8-billion valuation

Oct 2024: $1.1B to $8.1B post-CS-3, IPO withdrawn (due to outdated filings + CFIUS review)

2026: $1 billion private round, IPO refiling eyed Q2 2026 post private round; current private valuation ~$8 billion +

Nvidia's CUDA: A moat to Cerebras' WSE

Nvidia's CUDA (Compute Unified Device Architecture) is a parallel computing platform and API (Application Programming Interface) that lets developers use NVIDIA GPUs for general-purpose processing, not just graphics.

Nvidia is the industry standard with a massive, battle-tested ecosystem, optimised libraries (like cuDNN), and deep integration with all major AI frameworks (PyTorch, TensorFlow).

In terms of architecture, CUDA uses multiple, interconnected GPUs (like H100, B200) linked by high-speed NVLink. This requires complex coordination.

Cerebras and its supporters are betting that wafer-scale will disrupt the $100 billion AI chip market.

If its IPO fires (delayed in 2024 following a US probe about China ties), investors could see its value skyrocket.