Coronavirus: Here is why you need to trust experts

Misplaced emotions and limited knowledge on China virus can worsen irrational behaviour

When it comes to making decisions that involve risks, we humans can be irrational in quite systematic ways — a fact that the psychologists Amos Tversky and Daniel Kahneman famously demonstrated with the help of a hypothetical situation, eerily apropos of today’s coronavirus epidemic, that has come to be known as the Asian disease problem.

Professors Tversky and Kahneman asked people to imagine that the United States was preparing for an outbreak of an unusual Asian disease that was expected to kill 600 citizens. To combat the disease, people could choose between two options: a treatment that would ensure 200 people would be saved or one that had a 33 per cent chance of saving all 600 but a 67 per cent chance of saving none. Here, a clear favourite emerged: Seventy-two per cent chose the former.

But when Professors Tversky and Kahneman framed the question differently, such that the first option would ensure that only 400 people would die and the second option offered a 33 per cent chance that nobody would perish and a 67 per cent chance that all 600 would die, people’s preferences reversed. Seventy-eight per cent now favoured the second option.

This is irrational because the two questions don’t differ mathematically. In both cases, choosing the first option means accepting the certainty that 200 people live and 400 die, and choosing the second means embracing a one-third chance that all could be saved with an accompanying two-thirds chance that all will die. Yet in our minds, Professors Tversky and Kahneman explained, losses loom larger than gains, and so when the options are framed in terms of deaths rather than lives saved, we’ll accept more risk to try to avoid deaths.
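The equivalence can be checked with a little arithmetic. The sketch below is only an illustration, not part of the original study: it assumes the "33 per cent" and "67 per cent" figures are rounded forms of exactly one-third and two-thirds, and the function and variable names are invented for this example. It converts every option into an expected number of survivors out of the 600 at risk.

```python
from fractions import Fraction

AT_RISK = 600            # people expected to die if nothing is done
P_ALL = Fraction(1, 3)   # assumption: "33 per cent" read as exactly one-third


def expected_survivors(outcomes):
    """Return the expected number of survivors.

    outcomes is a list of (probability, survivors) pairs.
    """
    return sum(p * s for p, s in outcomes)


# Framing 1: options described as lives saved.
saved_sure = [(Fraction(1), 200)]
saved_gamble = [(P_ALL, 600), (1 - P_ALL, 0)]

# Framing 2: options described as deaths, converted to survivors.
deaths_sure = [(Fraction(1), AT_RISK - 400)]
deaths_gamble = [(P_ALL, AT_RISK - 0), (1 - P_ALL, AT_RISK - 600)]

for name, option in [
    ("saved, sure option", saved_sure),
    ("saved, gamble", saved_gamble),
    ("deaths, sure option", deaths_sure),
    ("deaths, gamble", deaths_gamble),
]:
    print(name, expected_survivors(option))
```

Under those assumptions, all four options come out to the same expected 200 survivors, and the two framings list identical outcomes. Only the wording changes.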

Fear factor

Our decision making is bad enough when the disease is hypothetical. But when the disease is real — when we see actual death rates climbing daily, as we do with the coronavirus — another factor besides our sensitivity to losses comes into play: fear.

The brain states we call emotions exist for one reason: to help us decide what to do next. They reflect our mind’s predictions for what’s likely to happen in the world and therefore serve as an efficient way to prepare us for it. But when the emotions we feel aren’t correctly calibrated for the threat or when we’re making judgements in domains where we have little knowledge or relevant information, our feelings become more likely to lead us astray.

Let me give you an example. In several experiments, my colleagues and I led people to feel sad or angry by having them read a magazine article that described either the impact of a natural disaster on a small town or the details of vehement anti-American protests abroad.

Next, we asked them to estimate the frequencies of events that, if they occurred, would typically make people feel sad (for example, the number of people who will have to euthanize a beloved pet this year) or angry (for example, the number of people who will be intentionally sold a “lemon” by a dishonest car dealer this year) — estimates for which people wouldn’t already hold a knowledgeable answer.

Emotional overtones

Time and again, we found that when the emotion people felt matched the emotional overtones of a future event, their predictions for that event’s frequency increased. For instance, people who felt angry expected many more people to get swindled by a car dealer than did those who felt sad, even though the anger they felt had nothing to do with cars. Likewise, those who felt sad expected more people to have to euthanize their pets.

Fear works in a similar way. Using a nationally representative sample in the months following Sept. 11, 2001, the decision scientist Jennifer Lerner showed that feeling fear led people to believe that certain anxiety-provoking possibilities (for example, a terrorist strike) were more likely to occur.

Such findings show that our emotions can bias our decisions in ways that don’t accurately reflect the dangers around us. As of Monday, only 12 people in the US were confirmed to have the coronavirus, and all have had or are undergoing medical monitoring.

Yet fear of contracting the virus is rampant. Throughout the US, there’s been a rush on face masks (most of which won’t help against the virus), a hesitance to go into crowded places and even a growing suspicion that any Asian might be a host for the virus.

Don’t get me wrong: Certain quarantine or monitoring policies can make great sense when the threat is real and the policies are based on accurate data. But the facts on the ground, as opposed to the fear in the air, don’t warrant such actions. For most of us, the seasonal flu, which has killed as many as 25,000 people in the US in just a few months, presents a much greater threat than does the coronavirus.

Emotion-induced bias

You might think that the best way to solve the problem is to get people to be more deliberative — to have them think more carefully about the issues involved. Unfortunately, when it comes to this type of emotion-induced bias, that strategy can make matters worse. When people spend more time considering an issue but don’t have the relevant facts at hand to make an informed decision, there are more opportunities for their feelings to fill in the blanks.

To demonstrate this, my colleagues and I conducted another series of experiments, in which we presented sad, angry or emotionally neutral people with a government proposal to raise taxes.

In one version of the proposal, we said the increased revenue would be used to reduce “depressing” problems (like poor conditions in nursing homes). In the other, we focused on “angering” problems (like increasing crime because of a shortage of police officers).

As we expected, when the emotions people felt matched the emotion of the rationales for the tax increase, their attitudes toward the proposal became more positive. But putting more effort into considering the proposal didn’t reduce this bias; it made it stronger.

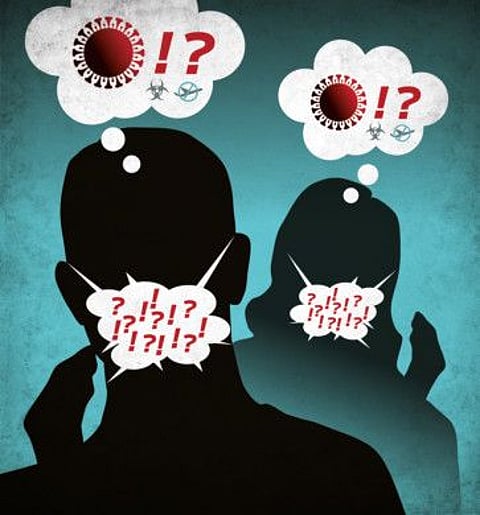

The mix of miscalibrated emotion and limited knowledge, the exact situation in which many people now find themselves with respect to the coronavirus, can set in motion a worsening spiral of irrational behaviour.

As news about the virus’s toll in China stokes our fears, it makes us not only more worried than we need be about contracting it, but also more susceptible to embracing fake claims and potentially problematic, hostile or fearful attitudes toward those around us — claims and attitudes that in turn reinforce our fear and amp up the cycle.

So how to fix the problem? Again, the solution isn’t to try to think more carefully about the situation. Most people don’t possess the medical knowledge to know how and when to best address viral epidemics, and as a result, their emotions hold undue sway. Rather, the solution is to trust data-informed expertise. But in today’s world, I worry a firm trust in expertise is lacking, making us too much the victim of fear.

— New York Times News Service

David DeSteno is a noted academic. He teaches psychology at Northeastern University.