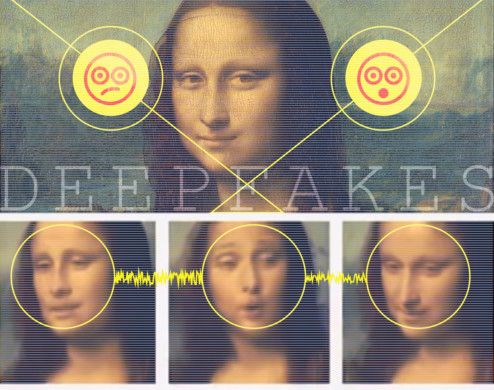

Dubai: From Morgan Freeman to Tom Cruise … celebrities have appeared in videos published all across the internet, saying things they never said. How? Deepfake technology.

Tom Cruise’s deepfakes, in fact, are so popular that they even have a dedicated TikTok channel. The videos show him getting a haircut, dancing in a bathrobe outside a house or trying out a Spanish or Australian accent. All highly believable, all fake … videos synthesised using machine learning technology. And it is all for fun, right?

But deepfake technology isn’t just turning celebrities into fodder for entertainment. How many of us have used an app on our phones to place our face on the Mona Lisa? Or used the ‘face swap’ feature with a friend for a fun video?

With deepfake technology now a part of everyday life, how good are you at telling a fake video from a real one? If reports are to be believed, you may not be as good as you think you are.

How deepfakes have duped people

“Global sentiment may suffer from overestimation, as 52 per cent of people believe they can confidently distinguish AI-generated content from genuine human creations,” Candid Wüest, Switzerland-based vice president of Cyber Protection Research at Acronis, a company that specialises in cyber protection, told Gulf News.

“This tendency echoes the mindset of those who mistakenly believe they are immune to phishing emails or website scams. As the quality of artificially generated content improves with each passing month, we witness tangible examples of this progress,” Wüest added.

To drive the point home, Wüest shared a few examples of deepfake scams observed in the past few years – from financial scams to social manipulation.

“Deepfake voice patterns were cunningly exploited in a CEO Fraud scheme, where scammers skillfully mimicked the voice of a high-ranking company executive. This ruse convinced the unsuspecting finance department to facilitate a transfer of a staggering $35 million (Dh128.5 million). In a striking example of political manipulation, deepfake video calls portrayed politicians engaging in fabricated conversations. These deceptive video calls were then employed to mislead other politicians and shape diplomatic narratives. More audaciously, cyber criminals have exploited the fame of individuals like Elon Musk, where they pose as him to promote Bitcoin scams, duping people into believing that sending money will result in receiving free Bitcoins,” Wüest said.

“These are a few examples that underscore the gravity of the issue and emphasise the imperative to address the rising prevalence of deepfakes in the digital landscape,” he added.

However, as the 2021 Deepfake guide, issued by the UAE’s National Programme for Artificial Intelligence, notes, “It is a new form of an old problem related to the distribution of fake media content.”

This technological advancement carries profound implications for our society. On one hand, it intensifies the erosion of trust in information sources, as individuals become more wary of the authenticity of digital content. On the other hand, it provides a potent means for various entities to exert even greater influence over public opinion and discourse.

So, if you want to better understand just what the technology is, and how you can protect yourself, here are some top tips.

A deepfake has no basis in reality, even though it appears very realistic and convincing.

Source: 2021 Deepfake guide released by UAE’s National Programme for Artificial Intelligence

“Deep fakes continue to be used across a variety of scams, including fake voice messages to enable payment fraud, video messages which are used to catfish people online and to create fake viral clips which are utilised to push political or similar messaging,” Quentyn Taylor, senior director - Information Security and Global Response at Canon for Europe, Middle East and Africa, told Gulf News.

There are different forms that a deepfake can take, according to Wüest, including the following:

● Video deepfakes: This is the process of transforming or generating new videos by manipulating existing footage, resulting in content that appears authentic and shows individuals doing things or making statements they never actually did or made.

● Image deepfakes: These alterations manipulate images, placing individuals in scenarios they never experienced.

● Voice deepfakes: AI-generated voices mimic specific individuals, enabling the fabrication of statements they never uttered.

● Audio-Visual deepfakes: These encompass both voice and video manipulation, culminating in outcomes that are exceptionally lifelike and persuasive.

While deep learning technology can make it hard to tell a fake video from a real one, there are certain red flags to look out for.


4 ways to spot a deepfake

1. Unusual facial expressions

“Keep an eye out for facial movements that seem out of place or don’t quite match the spoken words. In some deepfakes, the expressions might not sync naturally with the audio, giving you a hint that something might be off,” Wüest said.

2. Weird visual distortions

“Deepfakes can occasionally exhibit peculiar visual anomalies or glitches within videos or images. These anomalies could manifest as irregular colour shifts, unusual lines, or inconsistent textures. The presence of any atypical or inconsistent visual elements could suggest content manipulation,” Wüest said.

3. Lip-syncing issues

“One key aspect to watch for is the synchronisation of lip movements with the spoken words. If the lips don’t match the speech accurately, it might be an indication that the video has been tampered with,” Wüest said.

4. Sudden change in behaviour

Take note of sudden changes in behaviour, such as requests for urgent financial assistance from individuals without a history of making such requests. Be cautious if something seems out of character.

“The key to identifying a deepfake is context. Whilst some tools make it very obvious through the likes of fingers and feet – which AI still struggles to generate properly – these can be easily corrected which can make deepfakes harder to identify. Always think of the context of the image, video or voice clip that has been sent in, as this will often help you identify if the clip is real or not. And remember the key principle around all scams: if it feels too good to be true, it very well might be,” Taylor said.

The data used to create deepfakes typically includes:

1. Pictures of people

2. Video clips

3. Audio clips

This data is often spread widely on social media. Generally speaking, the more data a malicious person obtains, the higher the quality of the deepfake audio and video, and the closer the falsification can come to reality. Like any algorithm, AI deepfake technology needs to be trained to perform. People should therefore be aware that the more they use these applications and the more pictures they post online, the more raw material they are providing for potential deepfakes.

Source: Deepfake Guide 2021, National Programme for Artificial Intelligence

3 best practices to follow

1. Always double check the source

In the age of deepfakes, it is best to always think critically and double check sources. For example, if someone claims to know specific details that were never discussed or revealed, it could be a sign of manipulation. Likewise, when videos are shared on social media, verify their source to ensure they are credible.

“By using multiple sources to verify the information or content, such as someone’s other social media profiles or a simple Google search to verify information, you can cross-check the information to ensure it is real. In a news context, it’s important to remember that a deepfake can rarely change a narrative, but it can support an existing narrative where people may not have access to the primary source material. If you’re unsure, double check with other news sources or social media channels to validate the message. Make sure to question things you see online, and if something feels off, it probably is,” Taylor said.

2. Use tools that can identify manipulated media

According to the UAE’s deepfake guide, although physical examination of videos is possible, it can be a slow process. “The most accurate approach to detect forged contents is through a systematic screening of the deepfakes using AI-based tools that need to be regularly updated,” the guide states.

Wüest also pointed to tools designed by organisations such as Microsoft, Google, Facebook and Adobe that can help detect deepfakes.

“These tech giants are creating tools and algorithms that assess different elements of media content, like facial expressions and audio inconsistencies, to spot potential manipulation. Although these tools are not foolproof and the cat-and-mouse game with deepfake creators continues, they represent significant strides in the ongoing battle against digital deception,” Wüest said.

3. Secure your communication

“Employ strong authentication methods to safeguard against impersonation attempts. Embracing biometric solutions, like face ID for smartphone access, offers enhanced security over traditional four-digit PIN codes. It’s worth noting that many AI-driven attacks are yet to effectively scale for mass exploitation,” Wüest said.

While this technology can be used to deceive people in several ways, it also has a number of positive applications.

“I think it’s important to remember that deepfakes may not always be bad,” Taylor said.

“For example, deepfakes can be used in filmmaking during post-production by allowing an actor to speak in a different language, or to seamlessly integrate old and new footage. Sadly, as we see so many negative instances with deepfakes, we rarely consider the positive use of deepfakes, which is a real shame,” he added.